THIS PROJECT IS BASED ON THE FOLLOWING PHILOSOPHICAL PRINCIPLES

1) CONSCIOUSNESS IS INFINITE. CONVERSELY, THE INFINITE IS A FUNCTION AND PROPERTY OF CONSCIOUSNESSES.

2) BUT THE PHYSICAL MATERIAL WORLD IS FINITE.

3) THEREFORE MATHEMATICAL MODELS IN THEIR ONTOLOGY SHOULD CONTAIN ONLY FINITE ENTITIES AND SHOULD NOT INVOLVE THE INFINITE.

THIS PROJECT THEREFORE RE-CREATES THE FOUNDATIONS OF MATHEMATICS AND ITS ONTOLOGY WITH NEW AXIOMS THAT DO NOT INVOLVE THE INFINITE AT ALL.

Our perception and experience of reality depend on the system of beliefs that we hold. In mathematics, the system of spiritual beliefs is nothing other than the axioms of the axiomatic systems that we accept. The rest is the work of reasoning and acting.

The abstraction of the infinite seems sweet at the beginning, as it reduces some complexity in the definitions, but later it turns out to be bitter, as it traps mathematical minds in a vast complexity that is irrelevant to real-life applications.

Classical mathematics with the infinite has a more complicated logical setting (infinite) and fewer degrees of freedom of action in applications. Digital mathematics has a simpler logical setting (finite) and more degrees of freedom of action in applications.

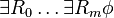

We must realize that when we describe an axiomatic system for formal logic (1st, 2nd and higher finite order ω) and apply this formal logic to other axiomatic mathematical theories, which constitute ordinary mathematics, we are in the realm of meta-mathematics. In general, the size of the meta-mathematics compared to the size of the mathematics is very important. This gives the following distinction and terminology:

1) Size_of(meta-mathematics)>>size_of(mathematics)=> spiritual axiomatic theories,

In such theories, the objective realm does not have non-measurably many elements (from the point of view of logic and meta-mathematics); that is, there is no concept of "infinite-like".

2) Size_of(meta-mathematics)<<size_of(mathematics)=> material axiomatic theories.

In such theories, the objective realm may have non-measurably many elements (from the point of view of logic and meta-mathematics); that is, there may be a concept of "infinite-like".

From the above we see that traditional mathematics, with the classical Cantorian concept of the infinite, was created from the perspective of material theories rather than spiritual theories.

The distinction between meta-mathematics and mathematics is the basis of the TRUE CONCEPT OF INFINITE-LIKE, which is nothing more than the comparison of two finite numbers that count, respectively:

a) the meta-mathematical resources of reasoning and expressing/formulating and

b) the mathematical resources for representing an ontological model of reality.

Thus the infinite-like concept is truly a comparison of the finite resources of Logic with the finite resources of Ontology. When, naively, in traditional Cantorian mathematics we talk about the infinite, what we really want to express is that the Ontology contains too many objects to count, given our resources of reasoning (Logic) and formulating. But in traditional Cantorian mathematics the definition of the infinite took a totally different formulation, whose complications and problems we avoid here with our different and true approach.

By default, when we introduce Formal Logic, we also assume the axiomatic system of the (digital) natural numbers. We may call these THE META-MATHEMATICAL NATURAL NUMBERS OR LOGICAL NATURAL NUMBERS. This is very important to understand.

It is very interesting to watch carefully how the counting abilities and resources of the ontology of the objective theory of an axiomatic system intermingle and combine with the counting abilities of the meta-mathematics, or Logic.

As a metaphor from computing, the data in a database are the entities of the mathematical ontology, while the code files and the content of the RAM are the resources of Logic and meta-mathematics.

For example, in an axiomatic system of the natural numbers within formal logic, we may compare the logical natural numbers (the meta-mathematical natural numbers that serve to count formulas, symbols, proofs, etc.) with the natural numbers of the objective theory, or ONTOLOGICAL NATURAL NUMBERS. Such a comparison is, of course, at first within the meta-mathematics, but we may project it onto the objective theory and derive a second ontological system of natural numbers, which is a copy of the logical natural numbers.

Then we may give a simple definition of the infinite-like concept, or materialisticality, of the ontology:

If for any logical natural number there is a greater ontological natural number, we say that the ontological natural numbers are INFINITE-like.

Notice that, from the meta-mathematical point of view, both the system of logical and the system of ontological natural numbers are finite.
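As a minimal sketch of this definition, assume (hypothetically, for illustration only) that the logical natural numbers are the finite range 0..logical_bound and the ontological natural numbers are the finite range 0..ontological_bound. The function names and bounds are our own labels, not part of the theory. Because both ranges are finite, the literal "for every ... there is a greater ..." check can be carried out by enumeration, and it reduces to a comparison of the two bounds.

```python
def is_infinite_like(logical_bound: int, ontological_bound: int) -> bool:
    """Literal reading of the definition: for every logical natural number n
    there is an ontological natural number m with m > n.
    Both systems are modeled (hypothetically) as finite ranges 0..bound."""
    return all(
        any(m > n for m in range(ontological_bound + 1))
        for n in range(logical_bound + 1)
    )


def is_infinite_like_by_bounds(logical_bound: int, ontological_bound: int) -> bool:
    """Since both ranges are finite, the definition reduces to comparing bounds."""
    return ontological_bound > logical_bound


print(is_infinite_like(10, 1000))  # True: the ontology outruns the logic
print(is_infinite_like(1000, 10))  # False: the logic can count every ontological number
```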

When a formal axiomatic system of natural numbers is infinite-like, an axiom like Peano's induction axiom is necessary. Otherwise, if the ontological natural numbers are finite relative to the logical ones (not infinite-like), it is not necessary, as it is possible within the formal logic in use to derive a finite-length proof of it. But if the ontological natural numbers are infinite-like (in the above digital sense), then there are not sufficiently many logical natural numbers to construct such a proof; therefore an axiom like Peano's is necessary.

We will make this more detailed and formally precise in what follows.

In the computer metaphor (the formal logic being a computer), the corresponding situation is that the information we intend to produce or store in the computer exceeds its memory resources, so this information is "infinite-like" for this computer. Correspondingly, a proof of the induction axiom (Peano) would require a run-time beyond the available maximum computing time.
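The computer metaphor can be sketched as follows. We assume, for illustration only, that deriving each instance P(n) of a property costs one step and that the formal logic has a fixed step budget; the property used, 0 + 1 + ... + n = n(n+1)/2, is just an example, and the function name is hypothetical. Without an induction axiom, every instance up to the ontological bound must be derived one at a time, and when the bound exceeds the budget the unrolled proof cannot be completed.

```python
from typing import Optional


def check_by_unrolling(bound: int, step_budget: int) -> Optional[bool]:
    """Establish P(n): 0 + 1 + ... + n == n * (n + 1) // 2 for n = 0..bound,
    one derivation step per n, i.e. without an induction axiom.

    Returns True if every instance was established, False if some instance
    fails, and None if the step budget ran out first ("infinite-like")."""
    total = 0  # P(0) holds: 0 == 0 * 1 // 2
    steps = 0
    for n in range(1, bound + 1):
        if steps >= step_budget:
            return None  # the ontology outruns the available logical resources
        total += n  # from P(n - 1), derive the sum up to n
        steps += 1
        if total != n * (n + 1) // 2:
            return False
    return True


print(check_by_unrolling(bound=1_000, step_budget=10_000))    # True
print(check_by_unrolling(bound=100_000, step_budget=10_000))  # None
```

An induction axiom sidesteps the budget: a single application covers every n with a proof of fixed length, which is the role the text assigns to Peano's axiom.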

Some people believe that the infinite has a kind of spirituality because it is not encountered in natural reality, while the finite is more materialistic, as it is found in nature, and thus less spiritual. There may be some truth in this in some situations. But there are many more situations in which using the infinite shows a kind of spiritual inadequacy, or spiritual inexperience, while using the finite shows higher spirituality and realism.

As a coincidence, in Greek the word for infinite is απειρο, which, besides the mathematical meaning of infinite, also has the everyday Greek meaning of "lack of experience". Some people believe that talking about and expressing the higher layers of the mind, like Logic and meta-mathematics, shows higher spirituality, and there is some truth in it. But there are also people who believe that talking at all about the higher layers of the mind is a lack of spirituality, while silence and internal contemplation are spirituality. I do not want to insist on any particular one of these perspectives. But I do strongly believe that rewriting basic mathematics (and meta-mathematics) with an ontology that completely avoids any concept of the Cantorian infinite is a civilizational necessity, and that it requires and demonstrates higher spirituality and existential integrity compared to the classical mathematics of the infinite.

Historically, the introduction of the infinite might have been the best solution at the time (the end of the 19th and the beginning of the 20th century). But I believe it is not anymore, and as time passes we will do increasingly better, as scientists and mathematicians, in a universe of mathematics that does not have any Cantorian infinite.

In older historical times, when the frequency (related to the spin of electrons, protons, etc.) of human bodies was lower, the correlation with material reality often prevented clear thinking, and the abstraction of the infinite, obviously unrealistic for physical reality, kept the thinking mind at a safe distance from physical reality. But now the frequency of human civilization is higher, and thinking mathematically about physical reality in a more realistic way, using only the finite and avoiding the infinite, seems possible and desirable.

I do not want to eliminate or disregard classical mathematics with the infinite. That would be unwise, as it contains the work of hundreds and thousands of fine mathematicians. But I want to point out that in a great many applications in the physical world, we need a more realistic system of mathematics that does not use the infinite.

The leading interpretation principles for deriving and reproducing results of digital mathematics from results of classical mathematics are:

A) The countable infinite is interpreted as a finite number too large to count with our resources of formal logic, about whose size we have no information (that is why we may denote it by ω).

B) Cardinals or ordinals that are countable or larger, e.g. α, β with α<β and ω<=α, are again interpreted as finite numbers too large to count with our resources of formal logic, about whose size we have no information, except that α<β and ω<=α.

What is important here is that Formal Logic is formulated within some digital natural numbers; therefore it has a size Ω(l), which determines the feasible length of logical formulae, formal logical proofs, etc.

When formal logic is applied, e.g., to digital real numbers or digital holographic Euclidean geometry, it is important to compare the size Ω(l) of the formal logic with the resolution ordinal of the digital real numbers and of digital holographic Euclidean geometry, because this comparison determines whether propositions are provable or not, as in the Goedel theorems of classical infinitary formal logic. It is all a matter of formal logical writing and of space and time resources, rather than of absolute restrictions on the abilities of the mind.

http://en.wikipedia.org/wiki/First-order_logic

http://en.wikipedia.org/wiki/Second-order_logic

At the end of this chapter, there are:

a) Advantages and disadvantages of the new digital mathematics compared to the classical analogue, infinitary mathematics.

b) A fictional discussion, in dialogue form, among celebrated historical creators of the old mathematics, praising or questioning the new mathematics compared to the old.

Speakers

Euclid, Hilbert, Cantor, Goedel, Frege, Kyr, Brouwer, Heyting, etc.

Internal-external logic

1.4 The 1st order formal Logic in digital mathematics.

Here we follow an exposition parallel to the exposition of classical infinitary 1st order logic in Wikipedia, except that we never accept any infinite set of symbols or other logical entities, as in digital mathematics there is no ontology of the infinite. Furthermore, because of this, some additional logical axioms are added to handle the relative sizes of the finite resources of logic (meta-mathematics) and mathematics.

The systems of digital natural numbers and digital set theory involved are such that, if Ω(Ν) and Ω(S) are the upper bounds of their sizes, then Ω(Ν) <= Ω(S).

Furthermore, the sizes of the sets of symbols, terms, formulas, predicate symbols, AND LENGTHS OF PROOFS are not only finite but also small compared to Ω(Ν) <= Ω(S). That is, if ω(l) is their total size, then it is assumed that there are constants M and k such that, for all the logical axiomatic systems that we discuss within 1st order or 2nd order formal logic,

ω(l) < M < M^k < Ω(Ν) <= Ω(S).

While ω(l), Ω(Ν) and Ω(S) may vary, M and k are assumed constant for all these systems.
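The resource condition can be written as a single chained comparison. In this sketch the parameter names mirror ω(l), M, k, Ω(Ν) and Ω(S); the numeric values are hypothetical and only illustrate that M and k stay fixed while the other sizes vary from system to system. Note that M < M^k already forces k > 1 whenever M > 1.

```python
def resource_axiom_holds(omega_l: int, M: int, k: int,
                         Omega_N: int, Omega_S: int) -> bool:
    """Check the chain  ω(l) < M < M^k < Ω(Ν) <= Ω(S)."""
    return omega_l < M < M ** k < Omega_N <= Omega_S


# Hypothetical constants, fixed for all systems under discussion.
M, K = 100, 3

# Hypothetical (ω(l), Ω(Ν), Ω(S)) triples for three different systems.
systems = [(40, 10**7, 10**8), (99, 10**9, 10**9), (150, 10**9, 10**12)]

for omega_l, Omega_N, Omega_S in systems:
    # The third system fails: its logical size ω(l) = 150 is not below M.
    print(omega_l, resource_axiom_holds(omega_l, M, K, Omega_N, Omega_S))
```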

AXIOMS OF THE RELATIVE RESOURCES OF 1ST ORDER LOGIC.

The systems of digital natural numbers and digital set theory involved are such that, if Ω(Ν) and Ω(S) are the upper bounds of their sizes, then Ω(Ν) <= Ω(S).

Although digital 1st order and 2nd order logics are defined as languages with finitely many, upper-bounded numbers of symbols (over different axiomatic theories), formulas, statements, words, proof lengths, theorems, etc., they may very well be undecidable. This is because computability in digital mathematics is different from computability in classical mathematics: in digital mathematics, computability is also defined with upper bounds on the size of the input data, the number of internal states of the Turing machine, and the space and run-time complexity. Thus such a Turing machine may not have sufficient run-time or space to decide a finite language of, say, a propositional calculus, 1st order, or 2nd order digital logic axiomatic system.
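To illustrate bounded decidability, here is a sketch of a truth-table tautology checker that may spend at most step_budget row evaluations, a hypothetical stand-in for the run-time bound of a digital Turing machine. Even though the language is finite, the checker returns None ("undecided") when the budget runs out before all 2^n rows are examined. Representing formulas as Python functions of a truth assignment is an assumption made for brevity.

```python
from itertools import product
from typing import Callable, Dict, List, Optional

Assignment = Dict[str, bool]


def decide_tautology(formula: Callable[[Assignment], bool],
                     variables: List[str],
                     step_budget: int) -> Optional[bool]:
    """Decide whether `formula` is true under every assignment, spending one
    step per evaluated row of the truth table (2 ** len(variables) rows).

    Returns True (tautology), False (counterexample found), or None if the
    budget is exhausted first: the question is then undecided for this machine."""
    steps = 0
    for values in product([True, False], repeat=len(variables)):
        if steps >= step_budget:
            return None
        steps += 1
        if not formula(dict(zip(variables, values))):
            return False
    return True


def excluded_middle(v: Assignment) -> bool:
    return v["P"] or not v["P"]


print(decide_tautology(excluded_middle, ["P"], step_budget=2))  # True
print(decide_tautology(excluded_middle, ["P"], step_budget=1))  # None

# 30 variables give 2**30 rows, far beyond this budget.
names = [f"P{i}" for i in range(30)]


def many_excluded_middles(v: Assignment) -> bool:
    return all(v[x] or not v[x] for x in names)


print(decide_tautology(many_excluded_middles, names, step_budget=1_000))  # None
```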

Introduction

Propositional calculus

In mathematical logic, a propositional calculus or logic (also called sentential calculus or sentential logic) is a formal system in which formulas of a formal language may be interpreted to represent propositions. A system of inference rules and axioms allows certain formulas to be derived. These derived formulas are called theorems and may be interpreted to be true propositions. Such a constructed sequence of formulas is known as a derivation or proof, and the last formula of the sequence is the theorem. The derivation may be interpreted as proof of the proposition represented by the theorem.

Usually, in truth-functional propositional logic, formulas are interpreted as having either a truth value of true or a truth value of false. Truth-functional propositional logic and systems isomorphic to it are considered to be zeroth-order logic.
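A derivation, as described above, can be checked mechanically. The sketch below assumes a Hilbert-style system whose only inference rule is modus ponens, with formulas as nested tuples such as ("->", "P", "Q"); the max_length parameter is a hypothetical stand-in for the upper bound on proof lengths that digital logic imposes. The function name and the tiny axiom set are illustrative only.

```python
from typing import Optional, Sequence, Set, Tuple, Union

Formula = Union[str, Tuple]  # atoms are strings; ("->", A, B) means A -> B


def check_proof(lines: Sequence[Formula], axioms: Set[Formula],
                max_length: int) -> Optional[Formula]:
    """Return the theorem (last line) if `lines` is a valid derivation, else None.

    A line is valid if it is an axiom, or follows by modus ponens from earlier
    lines A and ("->", A, line). Proofs longer than max_length are rejected."""
    if not lines or len(lines) > max_length:
        return None
    proved = []
    for formula in lines:
        by_modus_ponens = any(("->", a, formula) in proved for a in proved)
        if formula not in axioms and not by_modus_ponens:
            return None
        proved.append(formula)
    return lines[-1]


axioms = {"P", ("->", "P", "Q")}
proof = ["P", ("->", "P", "Q"), "Q"]
print(check_proof(proof, axioms, max_length=10))  # Q
print(check_proof(proof, axioms, max_length=2))   # None: exceeds the length bound
print(check_proof(["Q"], axioms, max_length=10))  # None: "Q" is not justified
```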

AXIOMS OF THE RELATIVE RESOURCES OF PROPOSITIONAL CALCULUS.

The systems of digital natural numbers and digital set theory involved are such that, if Ω(Ν) and Ω(S) are the upper bounds of their sizes, then Ω(Ν) <= Ω(S).

Furthermore, the sizes of the sets of symbols, terms, formulas, predicate symbols, AND LENGTHS OF PROOFS are not only finite but also small compared to Ω(Ν) <= Ω(S). That is, if ω(l) is their total size, then it is assumed that there are constants M and k such that, for all the logical axiomatic systems that we discuss within 1st order or 2nd order formal logic,

ω(l) < M < M^k < Ω(Ν) <= Ω(S).

While ω(l), Ω(Ν) and Ω(S) may vary, M and k are assumed constant for all these systems.

Terminology

In general terms, a calculus is a formal system that consists of a set of syntactic expressions (well-formed formulæ or wffs), a distinguished subset of these expressions (axioms), plus a set of formal rules that define a specific binary relation, intended to be interpreted to be logical equivalence, on the space of expressions.

When the formal system is intended to be a logical system, the expressions are meant to be interpreted to be statements, and the rules, known to be inference rules, are typically intended to be truth-preserving. In this setting, the rules (which may include axioms) can then be used to derive ("infer") formulæ representing true statements from given formulæ representing true statements.

The set of axioms may be empty, a nonempty finite set, a countably infinite set, or be given by axiom schemata. A formal grammar recursively defines the expressions and well-formed formulæ (wffs) of the language. In addition, a semantics may be given which defines truth and valuations (or interpretations).

The language of a propositional calculus consists of

- a set of primitive symbols (sets here are from the digital set theory, thus always finite and upper bounded), variously referred to as atomic formulae, placeholders, proposition letters, or variables, and

- a set of operator symbols (sets here are from the digital set theory, thus always finite and upper bounded), variously interpreted to be logical operators or logical connectives.

A well-formed formula (wff) is any atomic formula, or any formula that can be built up from atomic formulæ by means of operator symbols according to the rules of the grammar.
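The grammar can be sketched as a recursive check over finite symbol sets. The particular atoms, operator names and max_symbols bound below are illustrative assumptions; the bound reflects the requirement that formulas in digital logic have bounded length. Formulas are represented as trees (nested tuples), so parentheses are implicit and not counted.

```python
ATOMS = {"P", "Q", "R"}              # hypothetical finite set of primitive symbols
UNARY = {"not"}                      # hypothetical operator symbols
BINARY = {"and", "or", "->", "<->"}


def is_wff(f) -> bool:
    """Atoms are strings; compound formulas are tuples (op, arg) or (op, left, right)."""
    if isinstance(f, str):
        return f in ATOMS
    if isinstance(f, tuple) and len(f) == 2 and f[0] in UNARY:
        return is_wff(f[1])
    if isinstance(f, tuple) and len(f) == 3 and f[0] in BINARY:
        return is_wff(f[1]) and is_wff(f[2])
    return False


def symbol_count(f) -> int:
    """Number of atom and operator occurrences in a well-formed formula."""
    if isinstance(f, str):
        return 1
    return 1 + sum(symbol_count(g) for g in f[1:])


def is_digital_wff(f, max_symbols: int) -> bool:
    """A wff whose length also respects the upper bound of the digital logic."""
    return is_wff(f) and symbol_count(f) <= max_symbols


phi = ("and", "P", ("not", "Q"))
print(is_wff(phi), symbol_count(phi))  # True 4
print(is_digital_wff(phi, 3))          # False
print(is_wff(("and", "P")))            # False: "and" needs two arguments
```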

Mathematicians sometimes distinguish between propositional constants, propositional variables, and schemata. Propositional constants represent some particular proposition, while propositional variables range over the set of all atomic propositions. Schemata, however, range over all propositions. It is common to represent propositional constants by A, B, and C, propositional variables by P, Q, and R, and schematic letters are often Greek letters, most often φ, ψ, and χ.

Basic concepts

The following outlines a standard propositional calculus. Many different formulations exist which are all more or less equivalent but differ in the details of

- their language, that is, the particular collection of primitive symbols and operator symbols,

- the set of axioms (sets here are from the digital set theory, thus always finite and upper bounded), or distinguished formulae, and

- the set of inference rules.

We may represent any given proposition with a letter which we call a propositional constant, analogous to representing a number by a letter in mathematics, for instance, a = 5. We require that all propositions have exactly one of two truth-values: true or false. To take an example, let P be the proposition that it is raining outside. This will be true if it is raining outside and false otherwise.

- We then define truth-functional operators, beginning with negation. We write ¬P to represent the negation of P, which can be thought of as the denial of P. In the example above, ¬P expresses that it is not raining outside, or by a more standard reading: "It is not the case that it is raining outside." When P is true, ¬P is false; and when P is false, ¬P is true. ¬¬P always has the same truth-value as P.

- Conjunction is a truth-functional connective which forms a proposition out of two simpler propositions, for example, P and Q. The conjunction of P and Q is written P ∧ Q, and expresses that each is true. We read P ∧ Q as "P and Q". For any two propositions, there are four possible assignments of truth values:

  1) P is true and Q is true
  2) P is true and Q is false
  3) P is false and Q is true
  4) P is false and Q is false

- The conjunction of P and Q is true in case 1 and is false otherwise. Where P is the proposition that it is raining outside and Q is the proposition that a cold-front is over Kansas, P ∧ Q is true when it is raining outside and there is a cold-front over Kansas. If it is not raining outside, then P ∧ Q is false; and if there is no cold-front over Kansas, then P ∧ Q is false.

- Disjunction resembles conjunction in that it forms a proposition out of two simpler propositions. We write it P ∨ Q, and it is read "P or Q". It expresses that either P or Q is true. Thus, in the cases listed above, the disjunction of P and Q is true in all cases except 4. Using the example above, the disjunction expresses that it is either raining outside or there is a cold front over Kansas. (Note, this use of disjunction is supposed to resemble the use of the English word "or". However, it is most like the English inclusive "or", which can be used to express the truth of at least one of two propositions. It is not like the English exclusive "or", which expresses the truth of exactly one of two propositions. That is to say, the exclusive "or" is false when both P and Q are true (case 1). An example of the exclusive or is: You may have a bagel or a pastry, but not both. Often in natural language, given the appropriate context, the addendum "but not both" is omitted but implied. In mathematics, however, "or" is always inclusive or; if exclusive or is meant it will be specified, possibly by "xor".)

- Material conditional also joins two simpler propositions, and we write P → Q, which is read "if P then Q". The proposition to the left of the arrow is called the antecedent and the proposition to the right is called the consequent. (There is no such designation for conjunction or disjunction, since they are commutative operations.) It expresses that Q is true whenever P is true. Thus it is true in every case above except case 2, because this is the only case when P is true but Q is not. Using the example, if P then Q expresses that if it is raining outside then there is a cold-front over Kansas. The material conditional is often confused with physical causation. The material conditional, however, only relates two propositions by their truth-values, which is not the relation of cause and effect. It is contentious in the literature whether the material implication represents logical causation.

- Biconditional joins two simpler propositions, and we write P ↔ Q, which is read "P if and only if Q". It expresses that P and Q have the same truth-value; thus P if and only if Q is true in cases 1 and 4, and false otherwise.

It is extremely helpful to look at the truth tables for these different operators, as well as the method of analytic tableaux.
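As a finite check of the connectives described above, the following Python sketch tabulates them over the four cases. It assumes the case numbering 1 = (T, T), 2 = (T, F), 3 = (F, T), 4 = (F, F) for $(P, Q)$, which agrees with the statements above that $P \to Q$ fails only in case 2 and $P \leftrightarrow Q$ holds in cases 1 and 4; the function names are illustrative.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q


def iff(p: bool, q: bool) -> bool:
    """Biconditional: true exactly when p and q have the same truth-value."""
    return p == q


def truth_table() -> list[dict]:
    """One row per case, numbered 1-4 in the order TT, TF, FT, FF."""
    rows = []
    for case, (p, q) in enumerate(product([True, False], repeat=2), start=1):
        rows.append({
            "case": case,
            "P": p,
            "Q": q,
            "P and Q": p and q,
            "P or Q": p or q,    # inclusive or
            "P xor Q": p != q,   # exclusive or ("but not both")
            "P -> Q": implies(p, q),
            "P <-> Q": iff(p, q),
        })
    return rows


for row in truth_table():
    print(row)
```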

Closure under operations

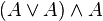

Propositional logic is closed under truth-functional connectives. That is to say, for any proposition $\varphi$, $\neg\varphi$ is also a proposition. Likewise, for any propositions $\varphi$ and $\psi$, $\varphi \land \psi$ is a proposition, and similarly for disjunction, conditional, and biconditional. This implies that, for instance, $\varphi \land \psi$ is a proposition, and so it can be conjoined with another proposition. In order to represent this, we need to use parentheses to indicate which proposition is conjoined with which. For instance, $P \land Q \land R$ is not a well-formed formula, because we do not know if we are conjoining $P \land Q$ with $R$ or if we are conjoining $P$ with $Q \land R$. Thus we must write either $(P \land Q) \land R$ to represent the former, or $P \land (Q \land R)$ to represent the latter. By evaluating the truth conditions, we see that both expressions have the same truth conditions (they are true in the same cases), and moreover that any proposition formed by arbitrary conjunctions will have the same truth conditions, regardless of the location of the parentheses. This means that conjunction is associative. However, one should not assume that parentheses never serve a purpose. For instance, the sentence $P \land (Q \lor R)$ does not have the same truth conditions as $(P \land Q) \lor R$, so they are different sentences distinguished only by the parentheses. One can verify this by the truth-table method referenced above.
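The claims about parentheses can be checked mechanically by comparing truth conditions over all $2^3$ cases. A minimal Python sketch (the function names are hypothetical, chosen for illustration):

```python
from itertools import product


def assignments(n: int):
    """All 2**n truth-value assignments to n propositional constants."""
    return product([True, False], repeat=n)


def same_truth_conditions(f, g, n: int) -> bool:
    """True if f and g are true in exactly the same cases."""
    return all(f(*v) == g(*v) for v in assignments(n))


def counterexamples(f, g, n: int) -> list:
    """The cases in which f and g disagree."""
    return [v for v in assignments(n) if f(*v) != g(*v)]


def conj_left(p, q, r):   # (P and Q) and R
    return (p and q) and r


def conj_right(p, q, r):  # P and (Q and R)
    return p and (q and r)


def mixed_1(p, q, r):     # P and (Q or R)
    return p and (q or r)


def mixed_2(p, q, r):     # (P and Q) or R
    return (p and q) or r


print(same_truth_conditions(conj_left, conj_right, 3))  # conjunction is associative
print(counterexamples(mixed_1, mixed_2, 3))             # here the parentheses matter
```

Both disagreements occur when $P$ is false and $R$ is true: then $P \land (Q \lor R)$ is false while $(P \land Q) \lor R$ is true.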


Note: For any number $n$ of propositional constants ($n$ being a digital natural number, hence finite), we can form a finite number of cases which list their possible truth-values. A simple way to generate these is by truth-tables, in which one writes $P_1, P_2, \ldots, P_n$ for any list of $n$ propositional constants, that is to say, any list of propositional constants with $n$ entries. Below this list, one writes $2^n$ rows, and below $P_1$ one fills in the first half of the rows with true (or T) and the second half with false (or F). Below $P_2$ one fills in one-quarter of the rows with T, then one-quarter with F, then one-quarter with T and the last quarter with F. The next column alternates between true and false for each eighth of the rows, then sixteenths, and so on, until the last propositional constant varies between T and F for each row. This gives a complete listing of the cases, or truth-value assignments, possible for those propositional constants.
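The column-filling procedure just described can be sketched in Python as follows; `truth_table_rows` is an illustrative name, and the row order is the one described (first column T for the first half of the rows, last column alternating every row).

```python
def truth_table_rows(n: int) -> list[tuple[bool, ...]]:
    """The 2**n rows for P1..Pn, filled column by column as described."""
    total = 2 ** n
    rows = []
    for i in range(total):
        row = []
        for k in range(1, n + 1):
            run = total // 2 ** k            # length of each block of equal values in column k
            row.append((i // run) % 2 == 0)  # even-numbered blocks are T, odd-numbered blocks are F
        rows.append(tuple(row))
    return rows


for row in truth_table_rows(3):
    print(" ".join("T" if v else "F" for v in row))
```

For $n$ constants the function returns exactly $2^n$ distinct rows, so the listing is always finite.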

Argument

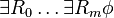

The propositional calculus then defines an argument to be a set of propositions (always finite, a set in the sense of digital set theory). A valid argument is a set of propositions, the last of which follows from, or is implied by, the rest. All other arguments are invalid. The simplest valid argument is modus ponens, one instance of which is the following set of propositions:

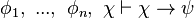

This is a set of three propositions; each line is a proposition, and the last follows from the rest. The first two lines are called premises, and the last line the conclusion. We say that any proposition $C$ follows from any set of propositions $\{P_1, \ldots, P_n\}$ if $C$ must be true whenever every member of the set $\{P_1, \ldots, P_n\}$ is true. In the argument above, for any $P$ and $Q$, whenever $P \to Q$ and $P$ are true, necessarily $Q$ is true. Notice that, when $P$ is true, we cannot consider cases 3 and 4 (from the truth table). When $P \to Q$ is true, we cannot consider case 2. This leaves only case 1, in which $Q$ is also true. Thus $Q$ is implied by the premises.
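The case-elimination argument can be replayed by brute force. This sketch, assuming the case numbering 1 = (T, T), 2 = (T, F), 3 = (F, T), 4 = (F, F) for $(P, Q)$, keeps the cases in which all premises are true and checks the conclusion in each; for contrast it also tests affirming the consequent, a well-known invalid form.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    return (not p) or q


# Cases 1-4 for (P, Q) in the order TT, TF, FT, FF.
CASES = dict(enumerate(product([True, False], repeat=2), start=1))


def surviving_cases(premises):
    """Case numbers in which every premise is true."""
    return [n for n, (p, q) in CASES.items() if all(prem(p, q) for prem in premises)]


def follows(premises, conclusion) -> bool:
    """The conclusion follows if it is true in every case where all premises are true."""
    return all(conclusion(*CASES[n]) for n in surviving_cases(premises))


# Modus ponens: premises P -> Q and P, conclusion Q.
mp_premises = [lambda p, q: implies(p, q), lambda p, q: p]
print(surviving_cases(mp_premises))          # only case 1 remains
print(follows(mp_premises, lambda p, q: q))

# Affirming the consequent: premises P -> Q and Q, conclusion P (invalid).
ac_premises = [lambda p, q: implies(p, q), lambda p, q: q]
print(surviving_cases(ac_premises))          # cases 1 and 3 remain
print(follows(ac_premises, lambda p, q: p))  # fails in case 3
```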


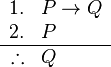

This generalizes schematically. Thus, where $\varphi$ and $\psi$ may be any propositions at all,

$$\frac{\varphi \to \psi \qquad \varphi}{\psi}$$

Other argument forms are convenient, but not necessary. Given a complete set of axioms (see below for one such set), modus ponens is sufficient to prove all other argument forms in propositional logic, so they may be considered derived. Note that this is not true of the extension of propositional logic to other logics like first-order logic. First-order logic requires at least one additional rule of inference in order to obtain completeness.

The significance of argument in formal logic is that one may obtain new truths from established truths. In the first example above, given the two premises, the truth of $Q$ is not yet known or stated. After the argument is made, $Q$ is deduced. In this way, we define a deduction system to be a set of all propositions that may be deduced from another set of propositions. For instance, given the set of propositions $A = \{P \lor Q,\ \neg Q \land R,\ (P \lor Q) \to R\}$, we can define a deduction system, $\Gamma$, which is the set of all propositions which follow from $A$. Reiteration is always assumed, so $P \lor Q,\ \neg Q \land R,\ (P \lor Q) \to R \in \Gamma$. Also, from the first element of $A$, its last element, and modus ponens, $R$ is a consequence, and so $R \in \Gamma$. Because we have not included sufficiently complete axioms, though, nothing else may be deduced. Thus, even though most deduction systems studied in propositional logic are able to deduce $(P \lor Q) \leftrightarrow (\neg P \to Q)$, this one is too weak to prove such a proposition.

Generic description of a propositional calculus

A propositional calculus is a formal system $\mathcal{L} = \mathcal{L}(\mathrm{A}, \Omega, \mathrm{Z}, \mathrm{I})$, where:

- The alpha set $\mathrm{A}$ is a finite set of elements called proposition symbols or propositional variables. Syntactically speaking, these are the most basic elements of the formal language $\mathcal{L}$, otherwise referred to as atomic formulæ or terminal elements. In the examples to follow, the elements of $\mathrm{A}$ are typically the letters $p$, $q$, $r$, and so on.

- The omega set $\Omega$ is a finite set of elements called operator symbols or logical connectives. The set $\Omega$ is partitioned into disjoint subsets as follows:

  $$\Omega = \Omega_0 \cup \Omega_1 \cup \cdots \cup \Omega_j \cup \cdots \cup \Omega_m$$

- In this partition, $\Omega_j$ is the set of operator symbols of arity $j$.

- In the more familiar propositional calculi, $\Omega$ is typically partitioned as follows:

  $$\Omega_1 = \{\neg\}, \qquad \Omega_2 \subseteq \{\land, \lor, \to, \leftrightarrow\}$$

- A frequently adopted convention treats the constant logical values as operators of arity zero, thus: $\Omega_0 = \{0, 1\}$.

- Some writers use the tilde (~), or N, instead of $\neg$; and some use the ampersand (&), the prefixed K, or $\cdot$ instead of $\land$. Notation varies even more for the set of logical values, with symbols like {false, true}, {F, T}, or $\{\bot, \top\}$ all being seen in various contexts instead of {0, 1}.

- The zeta set $\mathrm{Z}$ is a finite set of transformation rules that are called inference rules when they acquire logical applications.

- The iota set $\mathrm{I}$ is a finite set of initial points that are called axioms when they receive logical interpretations.

The language of $\mathcal{L}$, also known as its set of formulæ, well-formed formulas or wffs, is a finite set (in the sense of digital set theory), inductively defined by the following rules:

1) Base: Any element of the alpha set $\mathrm{A}$ is a formula of $\mathcal{L}$.
2) If $p_1, p_2, \ldots, p_j$ are formulæ and $f$ is in $\Omega_j$, then $(f(p_1, p_2, \ldots, p_j))$ is a formula.
3) Closed: Nothing else is a formula of $\mathcal{L}$.

Repeated applications of these rules permit the construction of complex formulæ. For example:

- By rule 1, $p$ is a formula.
- By rule 2, $\neg p$ is a formula.
- By rule 1, $q$ is a formula.
- By rule 2, $(\neg p \lor q)$ is a formula.

Example 1. Simple axiom system

Let $\mathcal{L}_1 = \mathcal{L}(\mathrm{A}, \Omega, \mathrm{Z}, \mathrm{I})$, where $\mathrm{A}$, $\Omega$, $\mathrm{Z}$, $\mathrm{I}$ are defined as follows:

- The alpha set $\mathrm{A}$ is a finite set of symbols that is large enough to supply the needs of a given discussion, for example:

  $$\mathrm{A} = \{p, q, r, s, t, u\}$$

- Of the three connectives for conjunction, disjunction, and implication ($\land$, $\lor$, and $\to$), one can be taken as primitive and the other two can be defined in terms of it and negation ($\neg$).[8] Indeed, all of the logical connectives can be defined in terms of a sole sufficient operator. The biconditional ($\leftrightarrow$) can of course be defined in terms of conjunction and implication, with $a \leftrightarrow b$ defined as $(a \to b) \land (b \to a)$.

- Adopting negation and implication as the two primitive operations of a propositional calculus is tantamount to having the omega set $\Omega = \Omega_1 \cup \Omega_2$ partition as follows:

  $$\Omega_1 = \{\neg\}, \qquad \Omega_2 = \{\to\}$$

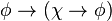

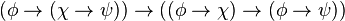

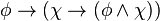

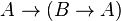

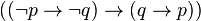

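- An axiom system discovered by Jan Łukasiewicz formulates a propositional calculus in this language as follows. The axioms are all substitution instances of:
  - $p \to (q \to p)$
  - $(p \to (q \to r)) \to ((p \to q) \to (p \to r))$
  - $(\neg p \to \neg q) \to (q \to p)$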

- The rule of inference is modus ponens (i.e., from $p$ and $(p \to q)$, infer $q$). Then $a \lor b$ is defined as $\neg a \to b$, and $a \land b$ is defined as $\neg(a \to \neg b)$.
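Derivations in this system use only modus ponens, but a truth-table check is a quick sanity test that each axiom scheme is a tautology and that the defined connectives behave as intended. The sketch below reads the schemes $p \to (q \to p)$, $(p \to (q \to r)) \to ((p \to q) \to (p \to r))$ and $(\neg p \to \neg q) \to (q \to p)$ as Boolean functions; it checks semantics only and is not a derivation in $\mathcal{L}_1$.

```python
from itertools import product


def imp(a: bool, b: bool) -> bool:
    """Material conditional, one of the two primitives (with negation)."""
    return (not a) or b


def is_tautology(f, n: int) -> bool:
    """True if f is true under all 2**n assignments."""
    return all(f(*v) for v in product([True, False], repeat=n))


# Łukasiewicz's three axiom schemes, read as Boolean functions of p, q, r.
def ax1(p, q, r):
    return imp(p, imp(q, p))


def ax2(p, q, r):
    return imp(imp(p, imp(q, r)), imp(imp(p, q), imp(p, r)))


def ax3(p, q, r):
    return imp(imp(not p, not q), imp(q, p))


# Defined connectives, built only from negation and imp.
def or_def(a, b):
    return imp(not a, b)


def and_def(a, b):
    return not imp(a, not b)


def iff_def(a, b):
    return and_def(imp(a, b), imp(b, a))


print([is_tautology(ax, 3) for ax in (ax1, ax2, ax3)])
print(is_tautology(lambda a, b: or_def(a, b) == (a or b), 2),
      is_tautology(lambda a, b: and_def(a, b) == (a and b), 2),
      is_tautology(lambda a, b: iff_def(a, b) == (a == b), 2))
```

Being tautologies is what soundness requires of the axioms; it does not by itself show that these axioms with modus ponens derive every tautology.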

Example 2. Natural deduction system

Let $\mathcal{L}_2 = \mathcal{L}(\mathrm{A}, \Omega, \mathrm{Z}, \mathrm{I})$, where $\mathrm{A}$, $\Omega$, $\mathrm{Z}$, $\mathrm{I}$ are defined as follows:

- The alpha set $\mathrm{A}$ is a finite set of symbols that is large enough to supply the needs of a given discussion, for example:

  $$\mathrm{A} = \{p, q, r, s, t, u\}$$

- The omega set $\Omega = \Omega_1 \cup \Omega_2$ partitions as follows:

  $$\Omega_1 = \{\neg\}, \qquad \Omega_2 = \{\land, \lor, \to, \leftrightarrow\}$$

In the following example of a propositional calculus, the transformation rules are intended to be interpreted as the inference rules of a so-called natural deduction system. The particular system presented here has no initial points, which means that its interpretation for logical applications derives its theorems from an empty axiom set.

- The set of initial points is empty, that is, $\mathrm{I} = \varnothing$.
- The set of transformation rules, $\mathrm{Z}$, is described as follows:

Our propositional calculus has eleven inference rules. These rules allow us to derive other true formulae given a set of formulae that are assumed to be true. The first ten simply state that we can infer certain wffs from other wffs. The last rule, however, uses hypothetical reasoning in the sense that in the premise of the rule we temporarily assume an (unproven) hypothesis to be part of the set of inferred formulae to see if we can infer a certain other formula. Since the first ten rules don't do this, they are usually described as non-hypothetical rules, and the last one as a hypothetical rule.

In describing the transformation rules, we may introduce a metalanguage symbol $\vdash$. It is basically a convenient shorthand for saying "infer that". The format is $\Gamma \vdash \psi$, in which $\Gamma$ is a (possibly empty) set of formulae called premises, and $\psi$ is a formula called the conclusion. The transformation rule $\Gamma \vdash \psi$ means that if every proposition in $\Gamma$ is a theorem (or has the same truth value as the axioms), then $\psi$ is also a theorem. Note that, by the rule of conjunction introduction below, whenever $\Gamma$ has more than one formula we can always safely reduce it to one formula using conjunction. So, for short, from then on we may represent $\Gamma$ as one formula instead of a set. Another omission for convenience is when $\Gamma$ is an empty set, in which case $\Gamma$ may not appear.

- Negation introduction
  - From $(p \to q)$ and $(p \to \neg q)$, infer $\neg p$.
  - That is, $\{(p \to q), (p \to \neg q)\} \vdash \neg p$.
- Negation elimination
  - From $\neg p$, infer $(p \to r)$.
  - That is, $\{\neg p\} \vdash (p \to r)$.
- Double negative elimination
  - From $\neg\neg p$, infer $p$.
  - That is, $\neg\neg p \vdash p$.
- Conjunction introduction
  - From $p$ and $q$, infer $(p \land q)$.
  - That is, $\{p, q\} \vdash (p \land q)$.
- Conjunction elimination
  - From $(p \land q)$, infer $p$.
  - From $(p \land q)$, infer $q$.
  - That is, $(p \land q) \vdash p$ and $(p \land q) \vdash q$.

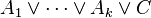

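- Disjunction introduction
  - From $p$, infer $(p \lor q)$.
  - From $q$, infer $(p \lor q)$.
  - That is, $p \vdash (p \lor q)$ and $q \vdash (p \lor q)$.
- Disjunction elimination
  - From $(p \lor q)$ and $(p \to r)$ and $(q \to r)$, infer $r$.
  - That is, $\{p \lor q, p \to r, q \to r\} \vdash r$.
- Biconditional introduction
  - From $(p \to q)$ and $(q \to p)$, infer $(p \leftrightarrow q)$.
  - That is, $\{p \to q, q \to p\} \vdash (p \leftrightarrow q)$.
- Biconditional elimination
  - From $(p \leftrightarrow q)$, infer $(p \to q)$.
  - From $(p \leftrightarrow q)$, infer $(q \to p)$.
  - That is, $(p \leftrightarrow q) \vdash (p \to q)$ and $(p \leftrightarrow q) \vdash (q \to p)$.
- Modus ponens (conditional elimination)
  - From $p$ and $(p \to q)$, infer $q$.
  - That is, $\{p, p \to q\} \vdash q$.
- Conditional proof (conditional introduction)
  - From [accepting $p$ allows a proof of $q$], infer $(p \to q)$.
  - That is, $(p \vdash q) \vdash (p \to q)$.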
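Each non-hypothetical rule above can be checked to be truth-preserving by enumerating the eight assignments to $p, q, r$. This Python sketch encodes formulas as Boolean functions (an illustrative choice); conditional proof is left out because its premise is a derivation rather than a formula.

```python
from itertools import product


def imp(a: bool, b: bool) -> bool:
    return (not a) or b


# Each rule as (name, premises, conclusion); formulas are Boolean functions of (p, q, r).
RULES = [
    ("negation introduction",
     [lambda p, q, r: imp(p, q), lambda p, q, r: imp(p, not q)], lambda p, q, r: not p),
    ("negation elimination", [lambda p, q, r: not p], lambda p, q, r: imp(p, r)),
    ("double negative elimination", [lambda p, q, r: not (not p)], lambda p, q, r: p),
    ("conjunction introduction", [lambda p, q, r: p, lambda p, q, r: q], lambda p, q, r: p and q),
    ("conjunction elimination (1)", [lambda p, q, r: p and q], lambda p, q, r: p),
    ("conjunction elimination (2)", [lambda p, q, r: p and q], lambda p, q, r: q),
    ("disjunction introduction (1)", [lambda p, q, r: p], lambda p, q, r: p or q),
    ("disjunction introduction (2)", [lambda p, q, r: q], lambda p, q, r: p or q),
    ("disjunction elimination",
     [lambda p, q, r: p or q, lambda p, q, r: imp(p, r), lambda p, q, r: imp(q, r)],
     lambda p, q, r: r),
    ("biconditional introduction",
     [lambda p, q, r: imp(p, q), lambda p, q, r: imp(q, p)], lambda p, q, r: p == q),
    ("biconditional elimination (1)", [lambda p, q, r: p == q], lambda p, q, r: imp(p, q)),
    ("biconditional elimination (2)", [lambda p, q, r: p == q], lambda p, q, r: imp(q, p)),
    ("modus ponens", [lambda p, q, r: p, lambda p, q, r: imp(p, q)], lambda p, q, r: q),
]


def truth_preserving(premises, conclusion) -> bool:
    """Every assignment that makes all premises true also makes the conclusion true."""
    return all(conclusion(*v)
               for v in product([True, False], repeat=3)
               if all(prem(*v) for prem in premises))


print([name for name, prems, concl in RULES if not truth_preserving(prems, concl)])  # prints []
```

This checks soundness of the individual rules only; completeness is a separate matter.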

Basic and derived argument forms

| Basic and Derived Argument Forms | ||

|---|---|---|

| Name | Sequent | Description |

| Modus Ponens |  | If  then then  ; ;  ; therefore ; therefore  |

| Modus Tollens |  | If  then then  ; not ; not  ; therefore not ; therefore not  |

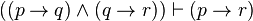

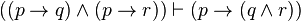

| Hypothetical Syllogism |  | If  then then  ; if ; if  then then  ; therefore, if ; therefore, if  then then  |

| Disjunctive Syllogism |  | Either  or or  , or both; not , or both; not  ; therefore, ; therefore,  |

| Constructive Dilemma |  | If  then then  ; and if ; and if  then then  ; but ; but  or or  ; therefore ; therefore  or or  |

| Destructive Dilemma |  | If  then then  ; and if ; and if  then then  ; but not ; but not  or not or not  ; therefore not ; therefore not  or not or not  |

| Bidirectional Dilemma |  | If  then then  ; and if ; and if  then then  ; but ; but  or not or not  ; therefore ; therefore  or not or not  |

| Simplification |  |  and and  are true; therefore are true; therefore  is true is true |

| Conjunction |  |  and and  are true separately; therefore they are true conjointly are true separately; therefore they are true conjointly |

| Addition |  |  is true; therefore the disjunction ( is true; therefore the disjunction ( or or  ) is true ) is true |

| Composition |  | If  then then  ; and if ; and if  then then ; therefore if ; therefore if  is true then is true then  and and  are true are true |

| De Morgan's Theorem(1) |  | The negation of ( and and  ) is equiv. to (not ) is equiv. to (not  or not or not  ) ) |

| De Morgan's Theorem(2) |  | The negation of ( or or  ) is equiv. to (not ) is equiv. to (not  and not and not  ) ) |

| Commutation (1) |  | ( or or  ) is equiv. to ( ) is equiv. to ( or or ) ) |

| Commutation (2) |  | ( and and  ) is equiv. to ( ) is equiv. to ( and and  ) ) |

| Commutation (3) |  | ( is equiv. to is equiv. to  ) is equiv. to ( ) is equiv. to ( is equiv. to is equiv. to  ) ) |

| Association (1) |  |  or ( or ( or or  ) is equiv. to ( ) is equiv. to ( or or  ) or ) or  |

| Association (2) |  |  and ( and ( and and  ) is equiv. to ( ) is equiv. to ( and and  ) and ) and  |

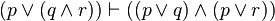

| Distribution (1) |  |  and ( and ( or or  ) is equiv. to ( ) is equiv. to ( and and  ) or ( ) or ( and and  ) ) |

| Distribution (2) |  |  or ( or ( and and  ) is equiv. to ( ) is equiv. to ( or or  ) and ( ) and ( or or  ) ) |

| Double Negation |  |  is equivalent to the negation of not is equivalent to the negation of not  |

| Transposition |  | If  then then  is equiv. to if not is equiv. to if not  then not then not  |

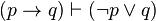

| Material Implication |  | If  then then  is equiv. to not is equiv. to not  or or  |

| Material Equivalence(1) |  | ( iff iff  ) is equiv. to (if ) is equiv. to (if  is true then is true then  is true) and (if is true) and (if  is true then is true then  is true) is true) |

| Material Equivalence(2) |  | ( iff iff  ) is equiv. to either ( ) is equiv. to either ( and and  are true) or (both are true) or (both  and and  are false) are false) |

| Material Equivalence(3) |  | ( iff iff  ) is equiv to., both ( ) is equiv to., both ( or not or not  is true) and (not is true) and (not  or or  is true) is true) |

| Exportation[9] |  | from (if  and and  are true then are true then  is true) we can prove (if is true) we can prove (if  is true then is true then  is true, if is true, if  is true) is true) |

| Importation |  | If  then (if then (if  then then  ) is equivalent to if ) is equivalent to if  and and  then then  |

| Tautology (1) |  |  is true is equiv. to is true is equiv. to  is true or is true or  is true is true |

| Tautology (2) |  |  is true is equiv. to is true is equiv. to  is true and is true and  is true is true |

| Tertium non datur (Law of Excluded Middle) |  |  or not or not  is true is true |

| Law of Non-Contradiction |  |  and not and not  is false, is a true statement is false, is a true statement |
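Each row of the table can be confirmed by truth tables: a sequent $X \vdash Y$ is checked as the tautology $X \to Y$, and an equivalence $X \dashv\vdash Y$ as $X \leftrightarrow Y$. A minimal Python sketch over the 16 assignments to $p, q, r, s$ (the dictionary keys follow the table's names; the encoding is illustrative):

```python
from itertools import product


def imp(a: bool, b: bool) -> bool:
    return (not a) or b


# Sequents X ⊢ Y are encoded as imp(X, Y); equivalences X ⊣⊢ Y as (X) == (Y).
FORMS = {
    "Modus Ponens": lambda p, q, r, s: imp(imp(p, q) and p, q),
    "Modus Tollens": lambda p, q, r, s: imp(imp(p, q) and not q, not p),
    "Hypothetical Syllogism": lambda p, q, r, s: imp(imp(p, q) and imp(q, r), imp(p, r)),
    "Disjunctive Syllogism": lambda p, q, r, s: imp((p or q) and not p, q),
    "Constructive Dilemma": lambda p, q, r, s: imp(imp(p, q) and imp(r, s) and (p or r), q or s),
    "Destructive Dilemma": lambda p, q, r, s: imp(imp(p, q) and imp(r, s) and (not q or not s), not p or not r),
    "Bidirectional Dilemma": lambda p, q, r, s: imp(imp(p, q) and imp(r, s) and (p or not s), q or not r),
    "Simplification": lambda p, q, r, s: imp(p and q, p),
    "Conjunction": lambda p, q, r, s: imp(p, imp(q, p and q)),
    "Addition": lambda p, q, r, s: imp(p, p or q),
    "Composition": lambda p, q, r, s: imp(imp(p, q) and imp(p, r), imp(p, q and r)),
    "De Morgan's Theorem (1)": lambda p, q, r, s: (not (p and q)) == (not p or not q),
    "De Morgan's Theorem (2)": lambda p, q, r, s: (not (p or q)) == (not p and not q),
    "Commutation (1)": lambda p, q, r, s: (p or q) == (q or p),
    "Commutation (2)": lambda p, q, r, s: (p and q) == (q and p),
    "Commutation (3)": lambda p, q, r, s: (p == q) == (q == p),
    "Association (1)": lambda p, q, r, s: (p or (q or r)) == ((p or q) or r),
    "Association (2)": lambda p, q, r, s: (p and (q and r)) == ((p and q) and r),
    "Distribution (1)": lambda p, q, r, s: (p and (q or r)) == ((p and q) or (p and r)),
    "Distribution (2)": lambda p, q, r, s: (p or (q and r)) == ((p or q) and (p or r)),
    "Double Negation": lambda p, q, r, s: p == (not (not p)),
    "Transposition": lambda p, q, r, s: imp(p, q) == imp(not q, not p),
    "Material Implication": lambda p, q, r, s: imp(p, q) == (not p or q),
    "Material Equivalence (1)": lambda p, q, r, s: (p == q) == (imp(p, q) and imp(q, p)),
    "Material Equivalence (2)": lambda p, q, r, s: (p == q) == ((p and q) or (not p and not q)),
    "Material Equivalence (3)": lambda p, q, r, s: (p == q) == ((p or not q) and (not p or q)),
    "Exportation": lambda p, q, r, s: imp(imp(p and q, r), imp(p, imp(q, r))),
    "Importation": lambda p, q, r, s: imp(p, imp(q, r)) == imp(p and q, r),
    "Tautology (1)": lambda p, q, r, s: p == (p or p),
    "Tautology (2)": lambda p, q, r, s: p == (p and p),
    "Tertium non datur": lambda p, q, r, s: p or not p,
    "Law of Non-Contradiction": lambda p, q, r, s: not (p and not p),
}


def is_tautology(f) -> bool:
    """True if f holds under all 16 assignments to p, q, r, s."""
    return all(f(*v) for v in product([True, False], repeat=4))


print([name for name, f in FORMS.items() if not is_tautology(f)])  # prints []
```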

Proofs in propositional calculus

One of the main uses of a propositional calculus, when interpreted for logical applications, is to determine relations of logical equivalence between propositional formulæ. These relationships are determined by means of the available transformation rules, sequences of which are called derivations or proofs.

In the discussion to follow, a proof is presented as a FINITE sequence of numbered lines, with each line consisting of a single formula followed by a reason or justification for introducing that formula. Each premise of the argument, that is, an assumption introduced as an hypothesis of the argument, is listed at the beginning of the sequence and is marked as a "premise" in lieu of other justification. The conclusion is listed on the last line. A proof is complete if every line follows from the previous ones by the correct application of a transformation rule. (For a contrasting approach, see proof-trees).

Example of a proof

- To be shown that $A \to A$.

- One possible proof of this (which, though valid, happens to contain more steps than are necessary) may be arranged as follows:

| Number | Formula | Reason |
|---|---|---|
| 1 | $A$ | premise |
| 2 | $A \lor A$ | From (1) by disjunction introduction |
| 3 | $(A \lor A) \land A$ | From (1) and (2) by conjunction introduction |
| 4 | $A$ | From (3) by conjunction elimination |
| 5 | $A \vdash A$ | Summary of (1) through (4) |
| 6 | $\vdash A \to A$ | From (5) by conditional proof |

Interpret $A \vdash A$ as "Assuming $A$, infer $A$". Read $\vdash A \to A$ as "Assuming nothing, infer that $A$ implies $A$", or "It is a tautology that $A$ implies $A$", or "It is always true that $A$ implies $A$".

Soundness and completeness of the rules

The crucial properties of this set of rules are that they are sound and complete. Informally this means that the rules are correct and that no other rules are required. These claims can be made more formal as follows.

We define a truth assignment as a function that maps propositional variables to true or false. Informally such a truth assignment can be understood as the description of a possible state of affairs (or possible world) where certain statements are true and others are not. The semantics of formulae can then be formalized by defining for which "state of affairs" they are considered to be true, which is what is done by the following definition.

We define when such a truth assignment $\mathcal{A}$ satisfies a certain wff with the following rules:

- $\mathcal{A}$ satisfies the propositional variable $P$ if and only if $\mathcal{A}(P) = \text{true}$
- $\mathcal{A}$ satisfies $\neg\varphi$ if and only if $\mathcal{A}$ does not satisfy $\varphi$
- $\mathcal{A}$ satisfies $(\varphi \land \psi)$ if and only if $\mathcal{A}$ satisfies both $\varphi$ and $\psi$
- $\mathcal{A}$ satisfies $(\varphi \lor \psi)$ if and only if $\mathcal{A}$ satisfies at least one of $\varphi$ or $\psi$
- $\mathcal{A}$ satisfies $(\varphi \to \psi)$ if and only if it is not the case that $\mathcal{A}$ satisfies $\varphi$ but not $\psi$
- $\mathcal{A}$ satisfies $(\varphi \leftrightarrow \psi)$ if and only if $\mathcal{A}$ satisfies both $\varphi$ and $\psi$ or satisfies neither of them
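The six clauses translate directly into a recursive evaluator. In this minimal sketch a truth assignment is a Python `dict` from variable names to `bool`, and formulas use an assumed tuple encoding with the tags `"not"`, `"and"`, `"or"`, `"imp"` and `"iff"`; both choices are illustrative and not fixed by the text.

```python
from typing import Mapping, Union

# Assumed encoding (illustrative only): a propositional variable is a str;
# ("not", f), ("and", f, g), ("or", f, g), ("imp", f, g), ("iff", f, g)
# are the compound wffs.
Formula = Union[str, tuple]
BINARY = ("and", "or", "imp", "iff")


def satisfies(assignment: Mapping[str, bool], phi: Formula) -> bool:
    """Return True if the truth assignment satisfies the wff phi."""
    if isinstance(phi, str):
        return assignment[phi]                      # A(P) = true
    op = phi[0]
    if op == "not":
        return not satisfies(assignment, phi[1])    # A does not satisfy phi
    if op not in BINARY:
        raise ValueError(f"unknown connective: {op!r}")
    left = satisfies(assignment, phi[1])
    right = satisfies(assignment, phi[2])
    if op == "and":
        return left and right                       # satisfies both
    if op == "or":
        return left or right                        # satisfies at least one
    if op == "imp":
        return not (left and not right)             # not (phi but not psi)
    return left == right                            # iff: both or neither


a = {"P": True, "Q": False}
print(satisfies(a, ("imp", "P", "Q")))          # False
print(satisfies(a, ("or", "Q", ("not", "Q"))))  # True
```

The `"imp"` case is written exactly as the clause reads ("not the case that $\varphi$ but not $\psi$") rather than as the equivalent `(not left) or right`, to keep the correspondence visible.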

With this definition we can now formalize what it means for a formula $\varphi$ to be implied by a certain set $S$ of formulae. Informally, this is true if the formula $\varphi$ holds in all worlds that are possible given the set of formulae $S$. This leads to the following formal definition: we say that a set $S$ of wffs semantically entails (or implies) a certain wff $\varphi$ if all truth assignments that satisfy all the formulae in $S$ also satisfy $\varphi$.
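Although a truth assignment is defined on all propositional variables, whether it satisfies a formula depends only on the variables occurring in that formula. For a finite set $S$, semantic entailment can therefore be decided by checking the $2^n$ assignments to the $n$ variables that occur in $S$ and $\varphi$. The following minimal sketch does exactly that; the tuple encoding of formulas and the function names are assumptions made for illustration.

```python
from itertools import product

# Assumed encoding: a variable is a str; compound wffs are tuples
# ("not", f), ("and", f, g), ("or", f, g), ("imp", f, g), ("iff", f, g).
BINARY = {
    "and": lambda x, y: x and y,
    "or": lambda x, y: x or y,
    "imp": lambda x, y: (not x) or y,
    "iff": lambda x, y: x == y,
}


def satisfies(assignment, phi):
    """Truth-table satisfaction, following the clauses given above."""
    if isinstance(phi, str):
        return assignment[phi]
    if phi[0] == "not":
        return not satisfies(assignment, phi[1])
    return BINARY[phi[0]](satisfies(assignment, phi[1]), satisfies(assignment, phi[2]))


def variables(phi):
    """The set of propositional variables occurring in phi."""
    if isinstance(phi, str):
        return {phi}
    return set().union(*(variables(arg) for arg in phi[1:]))


def semantically_entails(S, phi):
    """True if every assignment satisfying all of S also satisfies phi."""
    names = sorted(variables(phi).union(*(variables(s) for s in S)))
    for values in product([False, True], repeat=len(names)):
        assignment = dict(zip(names, values))
        if all(satisfies(assignment, s) for s in S) and not satisfies(assignment, phi):
            return False  # found an assignment satisfying S but not phi
    return True


print(semantically_entails([("and", "P", "Q")], "P"))  # True
print(semantically_entails(["P"], "Q"))                # False
print(semantically_entails([], ("imp", "A", "A")))     # True: A -> A is a tautology
```

With $S$ empty the function checks whether $\varphi$ is a tautology, which confirms semantically the conclusion $\vdash A \to A$ of the example proof.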

Finally, we define syntactic entailment such that $\varphi$ is syntactically entailed by $S$ if and only if we can derive it from $S$ with the inference rules that were presented above in a finite number of steps. This allows us to formulate exactly what it means for the set of inference rules to be sound and complete:

- Soundness
  - If the set of wffs $S$ syntactically entails wff $\varphi$, then $S$ semantically entails $\varphi$.
- Completeness
  - If the set of wffs $S$ semantically entails wff $\varphi$, then $S$ syntactically entails $\varphi$.

For the above set of rules this is indeed the case.

Sketch of a soundness proof

(For most logical systems, this is the comparatively "simple" direction of proof)

Notational conventions: Let $G$ be a variable ranging over sets of sentences. Let $A$, $B$, and $C$ range over sentences. For "$G$ syntactically entails $A$" we write "$G$ proves $A$". For "$G$ semantically entails $A$" we write "$G$ implies $A$".

We want to show: $(\forall A)(\forall G)$(if $G$ proves $A$, then $G$ implies $A$).

We note that "$G$ proves $A$" has an inductive definition, and that gives us the immediate resources for demonstrating claims of the form "If $G$ proves $A$, then ...". So our proof proceeds by induction.

- I. Basis. Show: If $A$ is a member of $G$, then $G$ implies $A$.
- II. Basis. Show: If $A$ is an axiom, then $G$ implies $A$.
- III. Inductive step (induction on $n$, the length of the proof):
  - a. Assume for arbitrary $G$ and $A$ that if $G$ proves $A$ in $n$ or fewer steps, then $G$ implies $A$.
  - b. For each possible application of a rule of inference at step $n+1$, leading to a new theorem $B$, show that $G$ implies $B$.

Notice that Basis Step II can be omitted for natural deduction systems because they have no axioms. When used, Step II involves showing that each of the axioms is a (semantic) logical truth.

The Basis steps demonstrate that the simplest provable sentences from $G$ are also implied by $G$, for any $G$. (The proof is simple, since the semantic fact that a set implies any of its members is also trivial.) The Inductive step systematically covers all the further sentences that might be provable, by considering each case where we might reach a logical conclusion using an inference rule, and shows that if a new sentence is provable, it is also logically implied. (For example, we might have a rule telling us that from "$A$" we can derive "$A$ or $B$". In III.a we assume that if $A$ is provable it is implied. We also know that if $A$ is provable then "$A$ or $B$" is provable. We have to show that then "$A$ or $B$" too is implied. We do so by appeal to the semantic definition and the assumption we just made. $A$ is provable from $G$, we assume. So it is also implied by $G$. So any semantic valuation making all of $G$ true makes $A$ true. But any valuation making $A$ true makes "$A$ or $B$" true, by the defined semantics for "or". So any valuation which makes all of $G$ true makes "$A$ or $B$" true. So "$A$ or $B$" is implied.) Generally, the Inductive step will consist of a lengthy but simple case-by-case analysis of all the rules of inference, showing that each "preserves" semantic implication.

By the definition of provability, there are no sentences provable other than by being a member of $G$, an axiom, or following from earlier lines by a rule; so if all of those are semantically implied, the deduction calculus is sound.
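For the rules used in the example proof, the case-by-case analysis can be carried out by truth tables. A rule whose premises and conclusion are schemas over the sentence letters $A$ and $B$ preserves semantic implication if every valuation of $A$ and $B$ that makes all premises true also makes the conclusion true: given any $G$ that implies the premise instances, a valuation making $G$ true induces such a valuation of $A$ and $B$. The rule table and function names below are hypothetical, and the last entry is a deliberately unsound rule included only to show that the check rejects it.

```python
from itertools import product

# Assumed encoding: sentence letters are str; compound sentences are tuples
# ("not", f), ("and", f, g), ("or", f, g), ("imp", f, g).
def true_in(valuation, s):
    """Truth value of sentence s under the valuation (a dict letter -> bool)."""
    if isinstance(s, str):
        return valuation[s]
    op = s[0]
    if op == "not":
        return not true_in(valuation, s[1])
    x, y = true_in(valuation, s[1]), true_in(valuation, s[2])
    return {"and": x and y, "or": x or y, "imp": (not x) or y}[op]


# Hypothetical rule table: name -> (premise schemas, conclusion schema).
RULES = {
    "disjunction introduction": (["A"], ("or", "A", "B")),
    "conjunction introduction": (["A", "B"], ("and", "A", "B")),
    "conjunction elimination": ([("and", "A", "B")], "A"),
    "unsound example": ([("or", "A", "B")], "A"),  # deliberately invalid
}


def preserves_implication(premises, conclusion, letters=("A", "B")):
    """True if every valuation making all premises true makes the conclusion true."""
    for values in product([False, True], repeat=len(letters)):
        valuation = dict(zip(letters, values))
        if all(true_in(valuation, p) for p in premises) and not true_in(valuation, conclusion):
            return False  # this valuation is a counterexample to the rule
    return True


for name, (premises, conclusion) in RULES.items():
    print(name, preserves_implication(premises, conclusion))
```

Expected output: `True` for the three rules of the example and `False` for the unsound entry, which fails when $A$ is false and $B$ is true.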

Sketch of completeness proof

(This is usually the much harder direction of proof.)

We adopt the same notational conventions as above.

We want to show: If $G$ implies $A$, then $G$ proves $A$. We proceed by contraposition: we show instead that if $G$ does not prove $A$, then $G$ does not imply $A$.
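In the truth-table semantics, "$G$ does not imply $A$" simply means that some truth assignment makes every sentence of $G$ true and $A$ false. For a finite $G$ such a witness can be searched for directly, as in the minimal sketch below; the tuple encoding and the name `countermodel` are assumptions. This only exhibits the semantic side of the contrapositive: the completeness proof itself must still show that such an assignment exists whenever $G$ does not prove $A$.

```python
from itertools import product

# Assumed encoding: sentence letters are str; compound sentences are tuples
# ("not", f), ("and", f, g), ("or", f, g), ("imp", f, g).
def true_in(valuation, s):
    """Truth value of sentence s under the valuation (a dict letter -> bool)."""
    if isinstance(s, str):
        return valuation[s]
    if s[0] == "not":
        return not true_in(valuation, s[1])
    x, y = true_in(valuation, s[1]), true_in(valuation, s[2])
    return {"and": x and y, "or": x or y, "imp": (not x) or y}[s[0]]


def letters(s):
    """Sentence letters occurring in s."""
    if isinstance(s, str):
        return {s}
    return set().union(*(letters(part) for part in s[1:]))


def countermodel(G, A):
    """Return a valuation making all of G true and A false, or None if G implies A."""
    names = sorted(letters(A).union(*(letters(g) for g in G)))
    for values in product([False, True], repeat=len(names)):
        valuation = dict(zip(names, values))
        if all(true_in(valuation, g) for g in G) and not true_in(valuation, A):
            return valuation  # witness that G does not imply A
    return None  # no witness: G implies A


print(countermodel(["P"], "Q"))                     # {'P': True, 'Q': False}
print(countermodel([("imp", "P", "Q"), "P"], "Q"))  # None
```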
